A class act

This month the UK’s Department for Education published generative AI guidance for educators. Developed by Chiltern Learning Trust and the Chartered College of Teaching, it outlines appropriate uses of generative AI in and outside of the classroom. Their advice includes using generative AI for “routine” letters to parents and marking “low-stakes” homework such as quizzes, in an effort to reduce the time teachers have to spend on administrative tasks.

If this really is a push for educators to be more ‘productive’ and focus on their teacher-student relationships, then how does using generative AI promote this? Will teachers be expected to tell parents and students when they use generative AI? When some students found out that their teacher had used ChatGPT, they tried to sue the college for their fees to be refunded, with one saying “From my perspective, the professor didn’t even read anything that I wrote.”

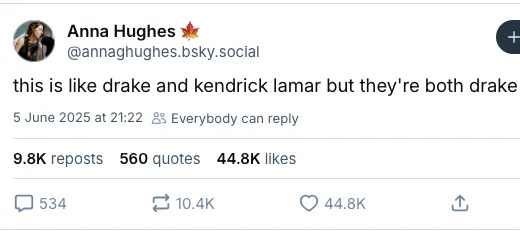

And what happens when it’s one rule for the students and another for the teachers? Since the launch of ChatGPT, so much of the conversation has focused on its use by students. In the Government’s education blog post, ‘AI in Schools and Colleges: What You Need to Know’, they provide guidance on how teachers can use AI but then go on to say it’s up to each school to decide how students should use AI. This two-tier system of who can and can’t use generative AI is not going to help create healthier relationships between students and teachers.

I remember when ChatGPT was introduced and started flooding classrooms. Many observers (not educators) spoke about how this was a good thing that would make us rethink what education could be, moving away from tick-box activities towards real, deep learning and reflection. I do understand where they were coming from. But then there was no mobilisation to help this happen - no funding for teachers and schools to create these new holistic systems. Educators just had to find their own ways to work around these tools.

And teachers are struggling. The rise of generative AI is making classrooms pretty grim. 404 Media shared stories from teachers across the USA about what it’s like to teach today.

“They describe trying to grade ‘hybrid essays half written by students and half written by robots,’ trying to teach Spanish to kids who don’t know the meaning of the words they’re trying to teach them in English, and students who use AI in the middle of conversation.”

I think one of the standout quotes is a teacher saying “They don’t have interests. Literally, when I ask them what they’re interested in, so many of them can’t name anything for me.” AI slop is here and it’s in the classrooms.

Which is why guidance for using generative AI tools in the classroom feels so misdirected. It’s not like teachers are unproductive. They don’t need more productivity tools - they need to be paid more! They need more time and less work on their plate. We can’t pretend that their plate-load is fine and all they need to do is eat quicker.

Smart Check It Out

There is a long history of AI systems in social services going wrong. We’ve had Australia’s Robodebt, the Netherlands’ childcare benefits scandal and India’s automated food system, to name a few.

But with the rise of “responsible AI” methodologies, Amsterdam’s council thought they could approach it differently. A team composed of AI ethicists, various civil society consultants, Accenture (lol) and developers worked on Smart Check - a fraud detection system for welfare claims.

In March 2023, after months of testing and building, the Amsterdam council released it. By November 2023 the pilot was shut down, due to biases found in the system - the city could not “definitely” say that “this is not discriminating.”

In a brilliant joint investigation from MIT Technology Review, Lighthouse Reports, and Trouw, three journalists were given a surprising look behind the development process, data and analytical models to understand what went wrong. They spoke to people who were part of the long development process, and to the silenced canaries in the coal mine.

It’s a really great read that gives a lot of breathing room to both sides, before landing some great punches about the way we hold different systems (AI and humans) to account, and why these systems are always focused on punishment. We have to learn when things do go wrong, rather than just move on. Releasing data like this is rare, but I for one am glad to see an unsuccessful tool exposed with some transparency.

Woooooah Wayve Mate

Have you heard of Lime Legs? It’s a phrase a friend shared over dinner, one she heard from a doctor. The rise in Lime bikes has led to an equal rise in injuries caused by heavy electric bikes falling onto people’s legs.

Now we’re hearing that Uber is partnering with autonomous vehicle developer Wayve to trial self-driving taxis in the UK’s capital city in spring 2026. Because yes, London, which is famous for its very complicated old roads and boroughs (unlike the straight, grid-like roads of America), is a perfect place to test customer-facing taxis. Not to mention that when we tested a driverless system before, in Edinburgh in 2023, it was shut down for a lack of passengers.

So I wonder what term we’ll come up with when Wayves start injuring people at similar rates to Lime bikes? Waaaaaaayve your limbs away? Wrecked Wayves? Late night Wave-ers? Send us your ideas.

One more thing:

A rare good book about a true crime

A Bonnie Blue and Andrew Tate collaboration is something I can’t process right now

Join us on 16th July for a workshop at Somerset House about digital legacy. Through guided creative activities, we explore how we want to (if at all) exist online once we have passed away. Book your free tickets. A soft conversation about hard topics.